How do you prove something is true? For mathematicians, the answer is simple: Start with some basic assumptions and proceed, step by step, to the conclusion. QED, proof complete. If there’s a mistake anywhere, an expert who reads the proof carefully should be able to spot it. Otherwise, the proof must be valid. Mathematicians have been following this basic approach for well over 2,000 years.

Then, in the 1980s and 1990s, computer scientists reimagined what a proof could be. They developed a dizzying variety of new approaches, and when the dust settled, two inventions loomed especially large: zero-knowledge proofs, which can convince a skeptic that a statement is true without revealing why it is true, and probabilistically checkable proofs, which can persuade a reader of the truth of a proof even if they only see a few tiny snippets of it.

“These are, to me, two of the most beautiful notions in all of theoretical computer science,” said Tom Gur, a computer scientist at the University of Cambridge.

It didn’t take long for researchers to try combining these two types of proof. They won a partial victory in the late 1990s, using lesser versions of each condition. For decades, no one could merge the ideal version of zero knowledge with the ideal version of probabilistic checkability.

Until now. In a paper that marks the culmination of seven years of work, Gur and two other computer scientists have finally combined the ideal versions of the two kinds of proof for an important class of problems.

“It’s a very important result,” said Eli Ben-Sasson, a theoretical computer scientist and founder of the company StarkWare, which develops cryptographic applications of zero-knowledge proofs. “It solves a very old and well-known open problem that has baffled researchers, including myself, for a very long time.”

Check, Please

The story begins in the early 1970s, when computer scientists began to formally study the difficulty of the problems they were asking computers to solve. Many of these problems share an important property: If someone finds a valid solution, they can easily convince a skeptical “verifier” that it really is valid. The verifier, in turn, will always be able to spot if there’s a mistake. Problems with this property belong to a class that researchers call NP.

To understand how such verification can work, consider this classic NP problem: Given a map divided into different regions, is it possible to fill it in using just three colors without giving adjacent regions the same color? Depending on the map, this problem can be notoriously difficult. But if you manage to find a valid coloring, you can prove it by showing a verifier a properly colored map. The verifier just needs to glance at every border.

A decade later, two graduate students pioneered a different way to think about mathematical proof. Shafi Goldwasser and Silvio Micali, both at the University of California, Berkeley, had been wondering whether it was possible to prevent cheating in an online poker game. That would require somehow proving that the cards in each player’s hand were drawn randomly, without also revealing what those cards were.

Goldwasser and Micali answered their own question with a resounding yes by inventing zero-knowledge proofs in a seminal 1985 paper, co-authored with the University of Toronto computer scientist Charles Rackoff. The following year, Micali and two other researchers followed up with a paper showing that the solution to any problem in NP can be verified using a specific kind of zero-knowledge proof.

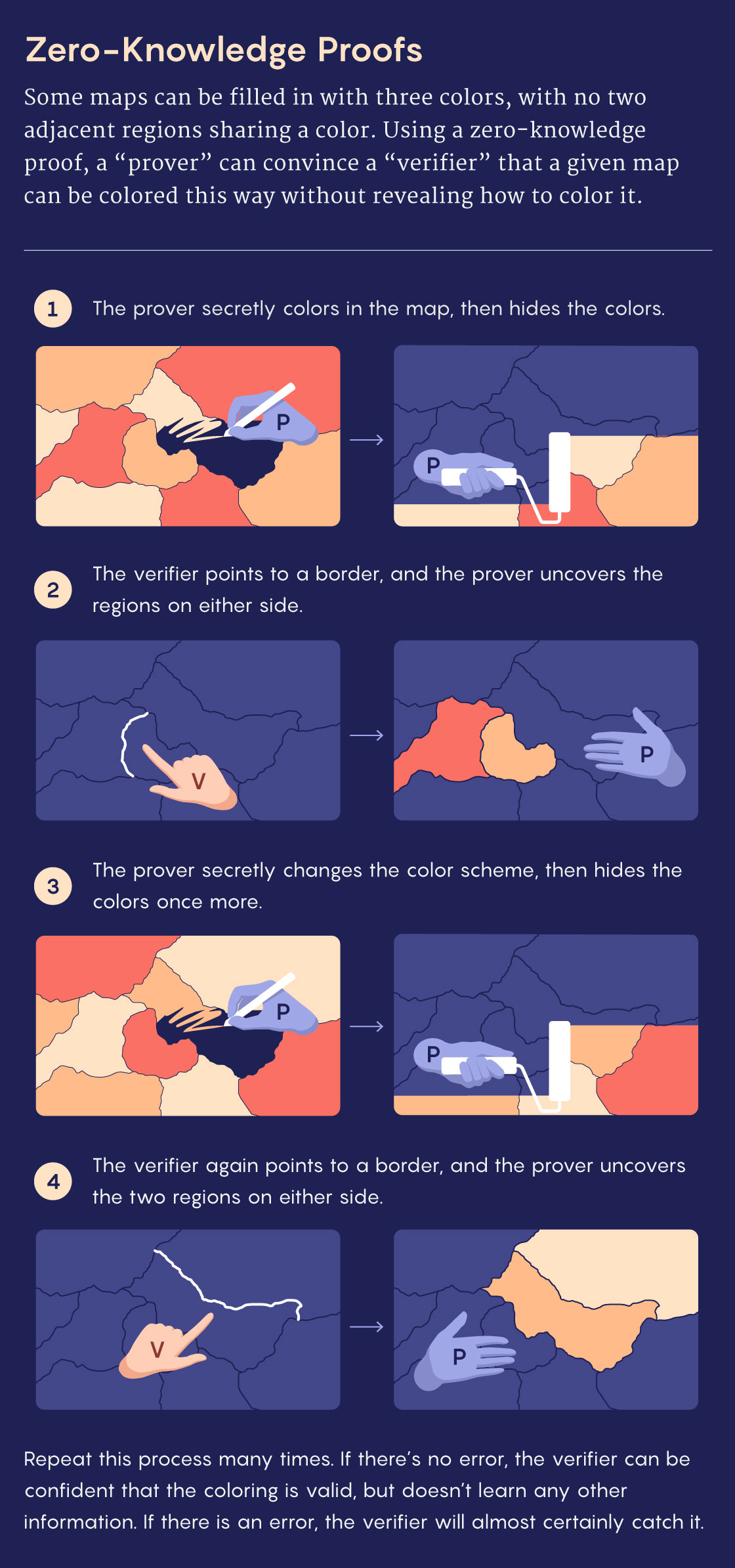

To get a sense of these proofs, suppose that you once again want to convince a verifier that a particular map is three-colorable — but this time, you don’t want the verifier to learn how to color it themself. Instead of drawing an example, you can prove it through an interactive process. Start by coloring in the map, and then carefully cover every region with black tape, leaving only the borders visible. The verifier then picks a border at random, and you’ll uncover the regions on either side, revealing two different colors.

Now repeat this process many times, randomly switching up the color scheme before each round so that the verifier can’t piece together any consistent information about your solution. (For example, swap red and blue regions and leave green regions unchanged.) If you’re bluffing, the verifier will eventually find a spot where the map isn’t properly colored. If you’re telling the truth, you’ll be able to convince them beyond a reasonable doubt in about as much time as it would take to verify a proof using the standard approach.

Mark Belan/Quanta Magazine

This zero-knowledge proof is strikingly different from the standard approach in two ways: It’s an interactive process rather than a document, and each participant relies on randomness to ensure that the other can’t predict their decisions. But because of that randomness, there’s now always a chance that a flawed proof will be deemed valid. Still, it’s easy to make that probability extremely small, and computer scientists quickly got over their discomfort at this looser definition of proof.

As Amit Sahai, a computer scientist at the University of California, Los Angeles, put it, “If the chance that something is not correct is less than one out of the number of particles in the universe, it seems reasonable for us to call that a proof.”

Pretty Cool Proofs

Researchers soon realized that randomized interactive proofs could do more than just hide secret information. They also enabled easy verification for problems much harder than those in NP. One type of interactive proof even worked for all problems in a class called NEXP. With ordinary proofs, the solutions to these problems can take as long just to verify as the hardest NP problems take to solve.

The proof revolution culminated in one final surprising discovery: You can still get the full power of interactive proofs without any interactions.

In principle, removing interactivity is straightforward. “The prover lists out all the possible challenges he could ever get from the verifier, and then just writes out all of his answers ahead of time,” Sahai said. The catch is that for complicated problems like the hardest ones in NEXP, the resulting document would be enormous, far too long to read from start to finish.

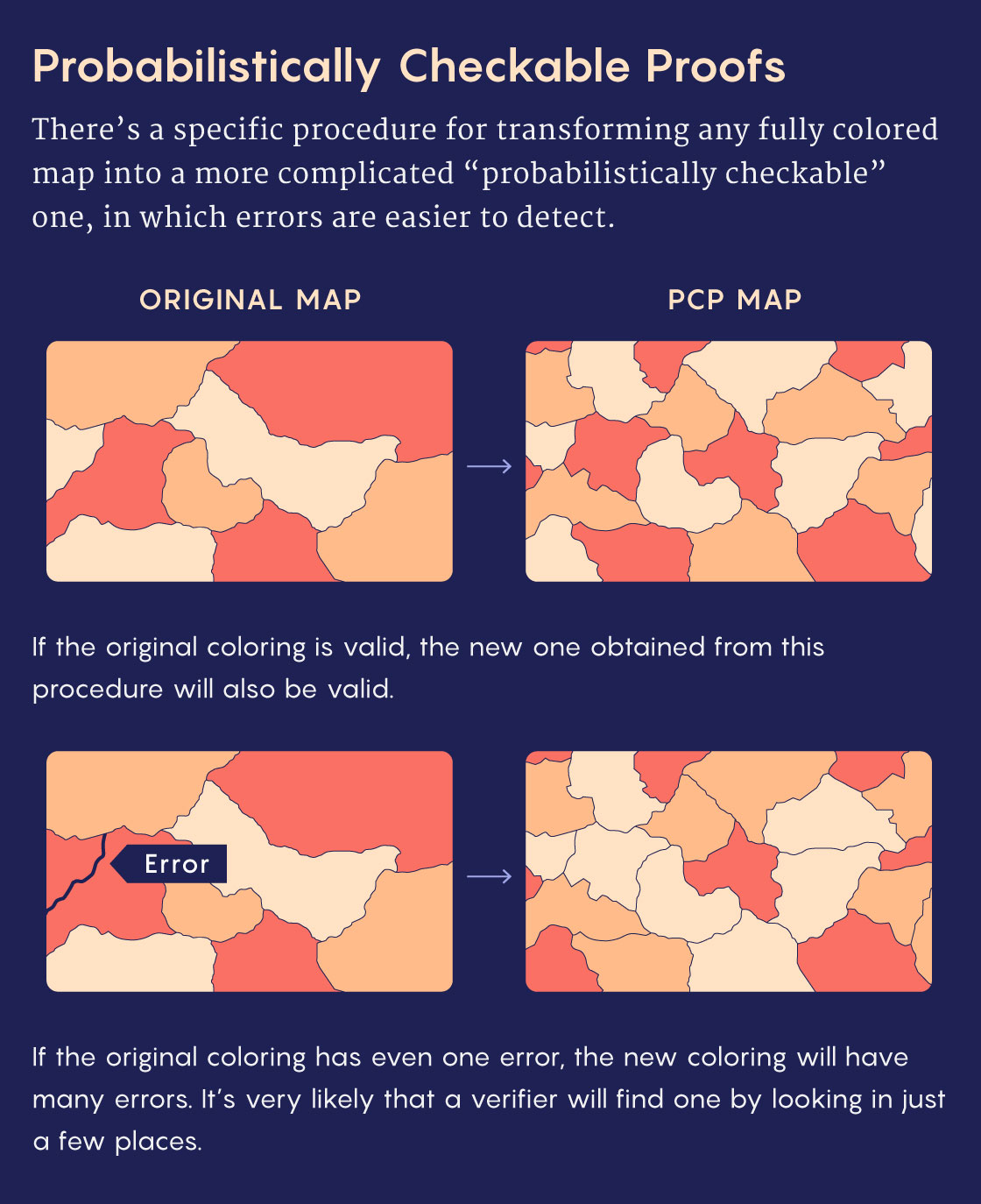

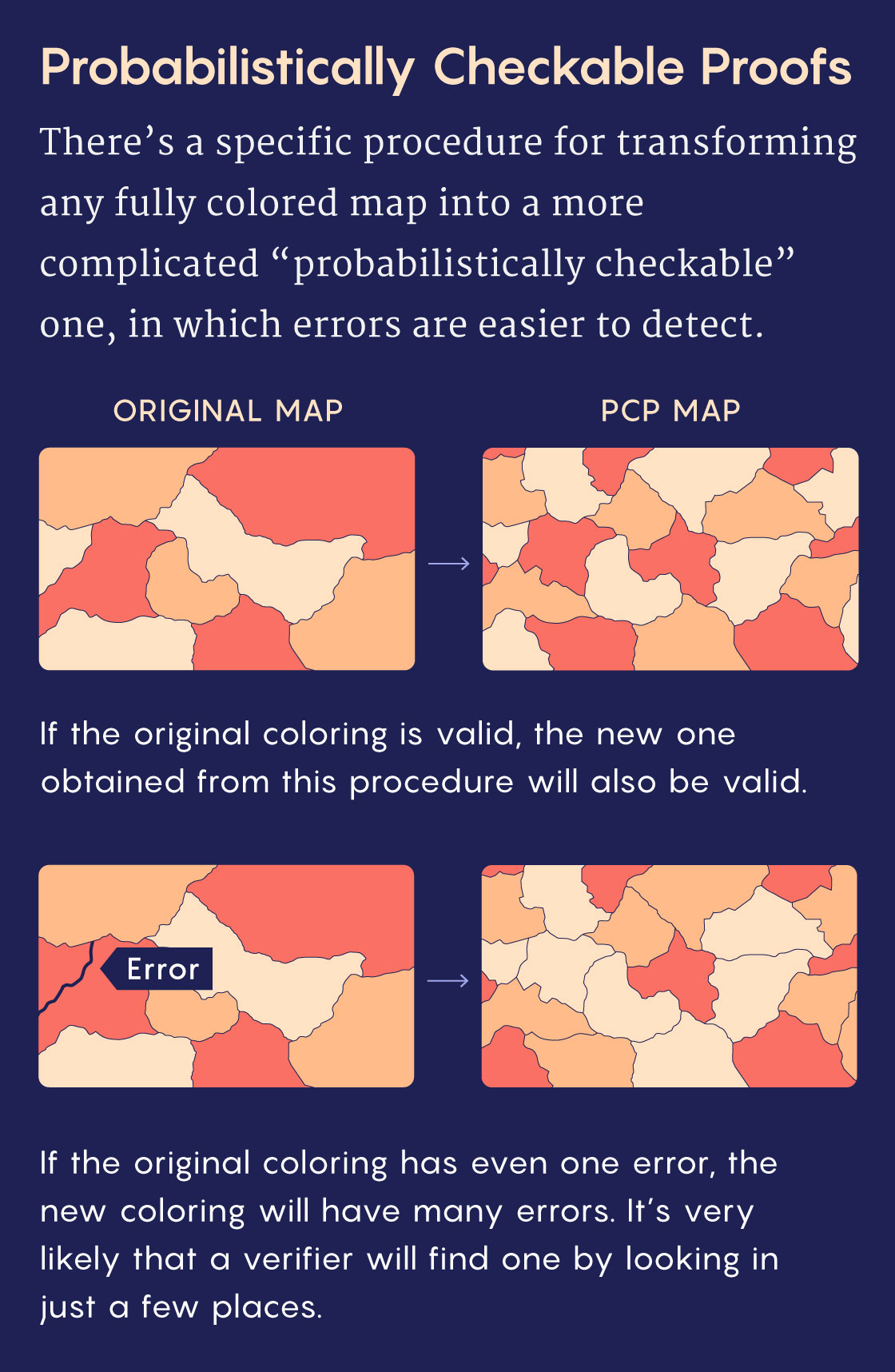

Then in 1992, the computer scientists Sanjeev Arora and Shmuel Safra defined a new class of noninteractive proofs: probabilistically checkable proofs, or PCPs. Along with other researchers, they showed that any solution to a NEXP problem could be rewritten in this special form. While PCPs are even longer than ordinary proofs, they can be rigorously vetted by a verifier who only reads small snippets. That’s because a PCP effectively multiplies and distributes any error in an ordinary proof. Trying to find an error in a normal proof is like hunting for a tiny dollop of jam by nibbling on a slice of toast. A PCP “spreads the jam uniformly over the piece of bread,” Gur said. “Wherever you take a bite, it doesn’t matter, you will always find it.”

Mark Belan/Quanta Magazine

The crucial element was, again, randomness — the verifier’s choice of snippets would have to be unpredictable, to ensure that a dishonest prover couldn’t hide inconsistencies anywhere.

Arora, Safra and others showed that PCPs could also dramatically speed up verification for more common NP problems. Soon after, Arora and four other researchers improved PCPs further, pushing the speed of NP proof verification to a theoretical limit — a celebrated result known as the PCP theorem.

“This is considered one of the big achievements of theoretical computer science,” said Yuval Ishai, a cryptographer at the Technion in Haifa, Israel.

The road to the PCP theorem had been anything but straightforward. Researchers started with zero-knowledge proofs for NP problems that used both interactivity and randomness. Then they realized that similar proofs could be used to verify the solutions to far harder problems. Finally, they showed that by transforming those proofs into noninteractive PCPs, they could verify a solution in less time than it would take to just read the proof. Computer scientists were feeling triumphant.