The Times Higher Education (THE) World University Rankings were released recently, on 10 October. No Indian university made it into the top 250. The older IITs have been boycotting these rankings for years because of their lack of transparency. IISc, however, continues to participate.

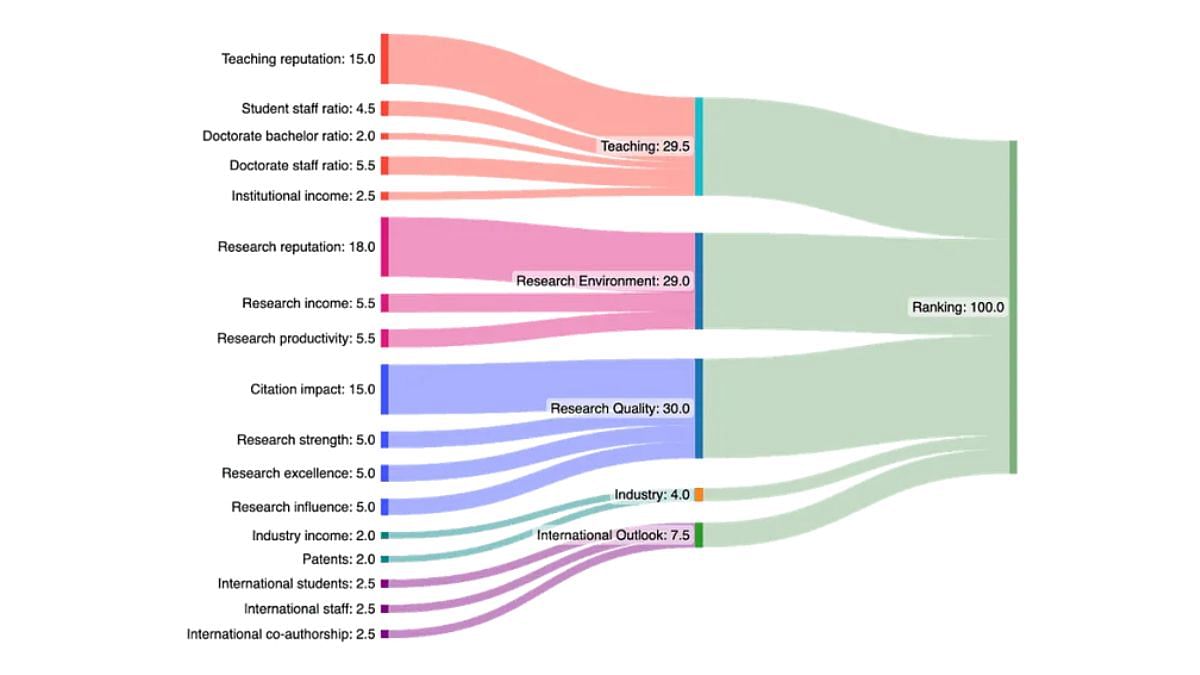

Some really surprising results in this year’s rankings have again put their soundness in question. ‘Research Quality’ accounts for 30% of the rankings. Here’s the weightage of all the parameters (Fig 1).

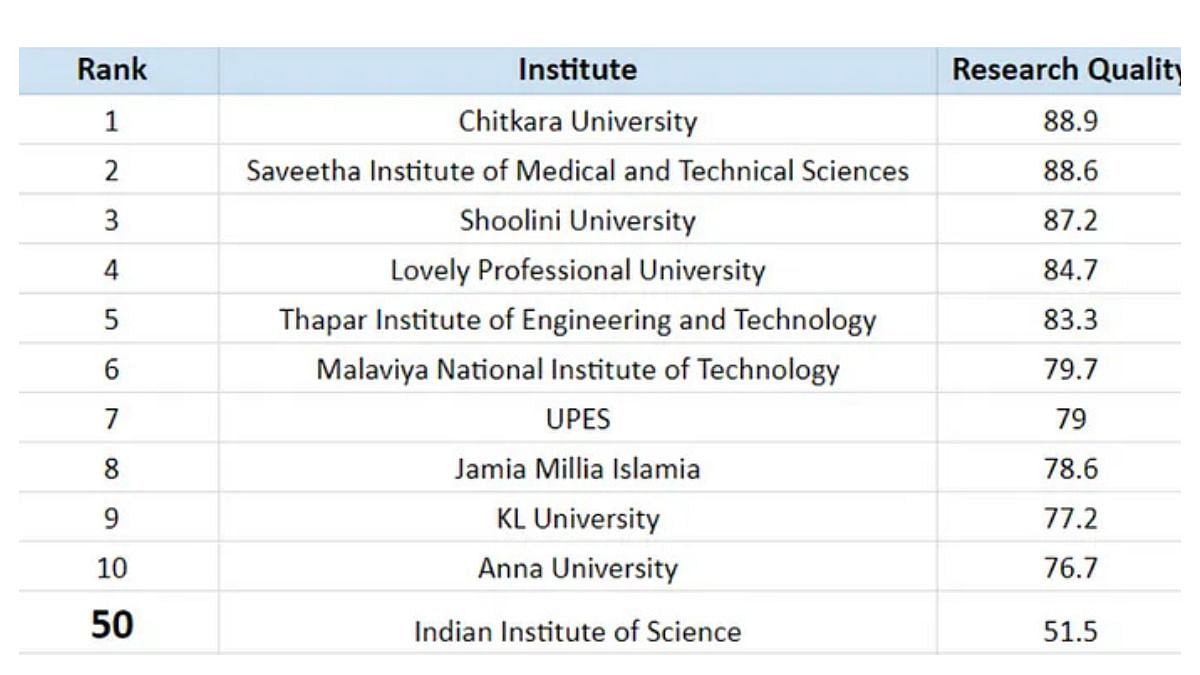

This led to a lot of noise on social media (LinkedIn and X) and calls for IISc to boycott these rankings. The more interesting question, however, is why the rankings threw up such seemingly absurd numbers. Was it a bug or a feature of the rankings? That depends on whose point of view you take.

Phil Baty, Global Affairs Officer at THE, had this to say when this table was shared with him “Most controversially, are mixed results in the “research excellence” category — which is based on a worldwide analysis of over 18 million research publications and over 150 million citations to those publications, all from Elsevier’s Scopus: an eye on global research.

While scores are outstanding for “research influence” (which examines the quality of papers citing IISc’s papers) and for “research excellence” (which looks at the proportion of papers reaching the top 10% most highly cited), there is a weaker score for “citation impact”. This is Elsevier’s common “Field Weighted Citation Impact” metric which examines the average number of citations per paper, normalised for over 300 disciplines and for the type and year of publication. This metrics rewards the quality of papers, not quantity, so small volumes of highly cited research can provide a strong score for smaller institutions”.

When asked whether FWCI included self-citations, he confirmed that it did. That is clearly a mistake. Here’s why:

50% of the Research Quality score comes from a parameter called Field Weighted Citation Impact (FWCI), calculated by the publisher Elsevier using its tool, Scopus. Citations are treated as a proxy for the quality of research: more citations mean more people are using your work, and thus it has more ‘impact’.
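To make the metric concrete, here is a minimal sketch of how a field-weighted score can be computed: each paper's citation count is divided by the expected count for papers of the same field, type and year, and the institution's score is the average of those ratios. The baseline figures and paper records below are invented purely for illustration, and the function names are hypothetical; this is not Elsevier's actual implementation.

```python
from statistics import mean

# Hypothetical expected citation counts per (field, document type, year).
# Real baselines come from Scopus; these numbers are made up.
EXPECTED = {
    ("physics", "article", 2021): 8.0,
    ("medicine", "review", 2021): 20.0,
}


def paper_fwci(citations, field, doc_type, year, expected=EXPECTED):
    """Ratio of actual citations to the expected count for similar papers."""
    return citations / expected[(field, doc_type, year)]


def institution_fwci(papers, expected=EXPECTED):
    """Average of the per-paper ratios for one institution."""
    return mean(
        paper_fwci(p["citations"], p["field"], p["doc_type"], p["year"], expected)
        for p in papers
    )


# Two invented papers: one cited twice as often as expected, one half as often.
papers = [
    {"citations": 16, "field": "physics", "doc_type": "article", "year": 2021},
    {"citations": 10, "field": "medicine", "doc_type": "review", "year": 2021},
]
score = institution_fwci(papers)
print(score)
```

A score of 1.0 would mean the institution's papers are cited exactly as often as expected for their field, type and year. Because the score is an average rather than a total, a small institution with a handful of heavily cited papers can score very high.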

THE, in all its wisdom, chose to include self-citations while using this metric. Self-citation, for the uninitiated, is when researchers or their university colleagues cite their own work. Clearly, that should not be counted if one is trying to measure quality. Otherwise people will keep citing themselves to inflate their citation counts. In fact, Saveetha Institute (second in the list) has been internationally flagged for self-citations by the reputed Science magazine.

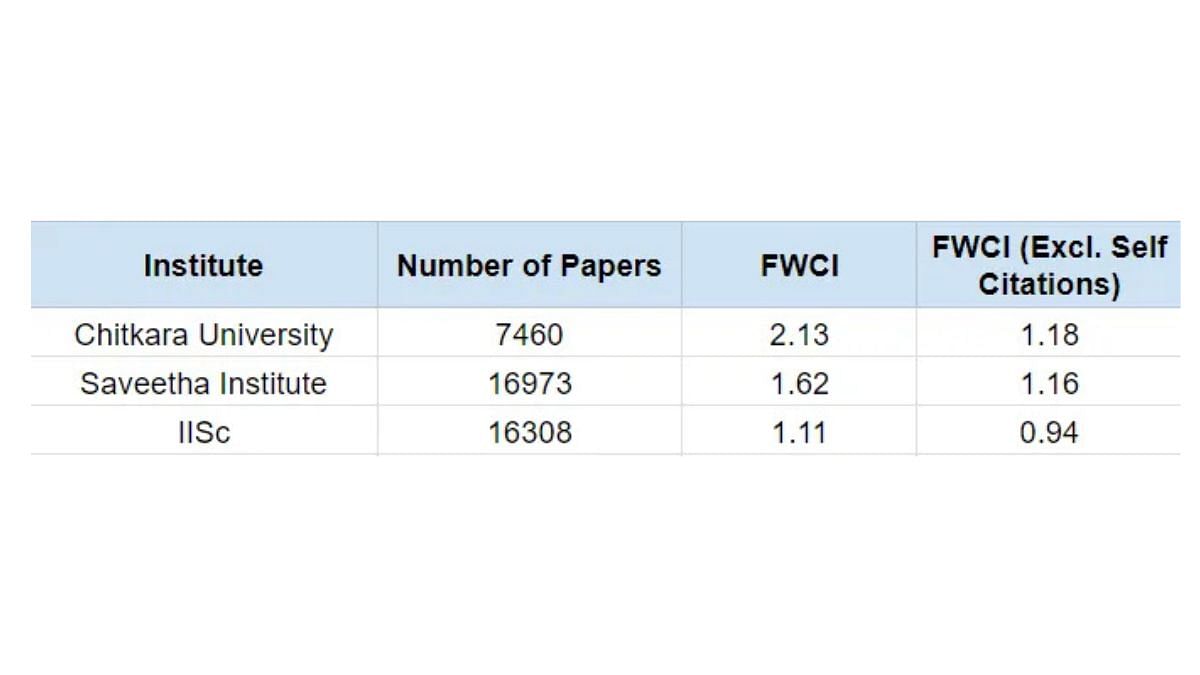

This means that universities that self-cite heavily score much higher than those that don’t artificially inflate their citation counts. How much difference does that make? Here’s a table comparing the FWCI for Chitkara, Saveetha and IISc, including and excluding self-citations. Once self-citations are excluded, the gap narrows considerably and the difference is no longer so glaring. Still, IISc continues to perform worse than the other two. A possible reason could be the exclusive use of the Scopus database.

Table 2: Effect of excluding self-citations; the gap narrows considerably. Source: Scopus
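The effect of the self-citation choice can be illustrated with a small sketch. It assumes each paper is represented by the list of institutions whose papers cite it, and treats a citation as a self-citation when it comes from the paper's own institution. The two universities, their citation lists and the single expected-citation baseline are all invented; they are not the figures for Chitkara, Saveetha or IISc.

```python
def count_citations(citing_institutions, home, include_self=True):
    """Count citations to one paper, optionally dropping those from `home`."""
    if include_self:
        return len(citing_institutions)
    return sum(1 for inst in citing_institutions if inst != home)


def mean_normalised_impact(papers, home, expected, include_self=True):
    """Average of citations / expected citations across an institution's papers."""
    ratios = [count_citations(p, home, include_self) / expected for p in papers]
    return sum(ratios) / len(ratios)


# Hypothetical data: each inner list names the institutions citing one paper.
EXPECTED = 2.0  # invented baseline, the same for every paper for simplicity
uni_a_papers = [["A", "B", "C", "D"], ["A", "C", "D", "B"]]  # some self-citations
uni_b_papers = [["C", "D", "B"], ["A", "C", "D"]]            # fewer self-citations

for name, papers in (("A", uni_a_papers), ("B", uni_b_papers)):
    with_self = mean_normalised_impact(papers, name, EXPECTED, include_self=True)
    without_self = mean_normalised_impact(papers, name, EXPECTED, include_self=False)
    print(name, with_self, without_self)
```

In this toy data, the gap between the two universities shrinks from 0.5 to 0.25 once self-citations are dropped, which is the kind of narrowing the table describes.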


Another possible reason for the absurd numbers in the table could be the use of Scopus. The tool only counts citations from other papers indexed in the Scopus database (28,000+ journals). The alternative, SCI, is more selective (9,000+ journals) and is considered to have better-quality journals than Scopus. Scopus, on the other hand, has more extensive coverage. This also means that some low-quality journals manage to sneak into the Scopus database.

IISc researchers presumably publish in higher-quality journals, which might not necessarily be indexed by Scopus. Their citations might also come from higher-quality journals, which again would not be reflected in the Scopus database.

Moreover, because the Scopus database is more permissive, it can include papers with as many as 4.5 pages of citations (Source: Smut Clyde, X). Universities can exploit this to inflate their citation counts further.

Another 16% of the Research Quality parameter comes from the same measure, FWCI. The only difference is that it takes the 75th-percentile paper, to avoid giving more points to universities with a few extremely highly cited papers. It still suffers from the same problems as mentioned above: self-citations and the quality of the Scopus database.
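A short sketch shows why a percentile is less sensitive than an average to a few extreme papers. The per-paper FWCI values below are invented, and the nearest-rank percentile used here is an assumption; the article does not state which percentile method THE uses.

```python
import math


def percentile_nearest_rank(values, pct):
    """Nearest-rank percentile: the value at rank ceil(pct/100 * n) once sorted."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[rank - 1]


# Hypothetical per-paper FWCI values with one extreme outlier.
fwci_values = [0.2, 0.5, 0.8, 1.0, 1.1, 1.3, 1.5, 40.0]

average = sum(fwci_values) / len(fwci_values)
p75 = percentile_nearest_rank(fwci_values, 75)
print(average, p75)
```

The single outlier drags the average up to roughly 5.8, while the 75th-percentile value stays at 1.3. The percentile is still computed from the same citation counts, so inflated citations that lift many papers at once would move it too.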

The amount of manipulation that goes on in research to game these rankings will boggle your mind. You can read the posts by this anonymous X handle (Angela Hong). Many of the posts are about Chitkara University and date from well before it became number one in research in India.

It’s not clear whether the THE team was not competent enough to understand these issues and modify its parameters, or wilfully left such parameters unchecked. In any case, the older IITs have made a wise choice in not participating in these rankings, lest they be humiliated as IISc was this year.

To be fair to THE, it is really difficult to measure research quality. One needs a much more sophisticated measure that takes into account all the kinds of gaming universities engage in. There is no guarantee that any new measure they create will not be gamed either.

This article has covered only the research parameter. Allegedly, a lot of other data manipulation (number of students, number of international students, number of faculty) also happens in the other parameters.

Rankings have become one of the biggest marketing tools for universities and they do everything in their power to climb up in them. Students, parents and policymakers must be aware of the pitfalls and futility of such rankings and not base their decisions on them.

Achal Agrawal is the founder of India Research Watch. Views are personal. This article was originally published on Medium.